In classification problems we split input examples by a certain characteristic. Usage examples: spam filters, language detection, finding similar documents, handwritten letter recognition, etc.

🤖 Logistic Regression - examples: microchip fitness detection, handwritten digit recognition using the one-vs-all approach.

Unsupervised learning is a branch of machine learning that learns from data that has not been labeled, classified or categorized.
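To make the classification case mentioned above more concrete, here is a minimal sketch of logistic regression with one input feature, trained by plain gradient descent. It is written in plain Python without 3rd-party libraries rather than taken from the repository's code. The data set (a single hypothetical "test score" per microchip with a pass/fail label), the function names, the learning rate and the iteration count are all assumptions made for illustration.

```python
import math


def sigmoid(z):
    """Squash any real number into the (0, 1) range."""
    return 1.0 / (1.0 + math.exp(-z))


def probability(theta0, theta1, x):
    """Estimated probability that example x belongs to class 1."""
    return sigmoid(theta0 + theta1 * x)


def fit_logistic(xs, ys, learning_rate=0.5, iterations=5000):
    """Fit theta0 and theta1 with batch gradient descent on the log loss."""
    theta0, theta1 = 0.0, 0.0
    m = len(xs)
    for _ in range(iterations):
        # Difference between predicted probability and true label (0 or 1).
        errors = [probability(theta0, theta1, x) - y for x, y in zip(xs, ys)]
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        theta0 -= learning_rate * grad0
        theta1 -= learning_rate * grad1
    return theta0, theta1


def classify(theta0, theta1, x):
    """Assign class 1 when the estimated probability reaches 0.5."""
    return 1 if probability(theta0, theta1, x) >= 0.5 else 0


# Made-up data: hypothetical test scores and whether the chip passed (1) or not (0).
scores = [0.5, 1.0, 1.5, 3.0, 3.5, 4.0]
passed = [0, 0, 0, 1, 1, 1]

theta0, theta1 = fit_logistic(scores, passed)
predictions = [classify(theta0, theta1, x) for x in scores]
```

After training, `predictions` can be compared with `passed` to see whether the learned boundary separates the two groups. A one-vs-all classifier for several classes would repeat the same fit once per class, each time treating that class as 1 and everything else as 0.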

The purpose of this repository was not to implement machine learning algorithms using 3rd-party libraries or Octave/MATLAB "one-liners", but rather to practice and to better understand the mathematics behind each algorithm. In most cases the explanations are based on this great machine learning course.

In supervised learning we have a set of training data as an input and a set of labels or "correct answers" for each training example as an output. We then train our model (the machine learning algorithm's parameters) to map the input to the output correctly, that is, to make correct predictions. The ultimate purpose is to find model parameters that keep the input→output mapping (the predictions) correct even for new input examples.

In regression problems we predict real values. Basically, we try to draw a line/plane/n-dimensional plane along the training examples. Usage examples: stock price forecasting, sales analysis, modeling how one number depends on others, etc.

🤖 Linear Regression - example: house price prediction.
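The regression idea described above, drawing a line along the training examples, can be sketched as follows. This is a minimal illustration in plain Python without 3rd-party libraries, not the repository's own implementation. The tiny data set (made-up house sizes and prices lying exactly on the line y = 1 + 2x), the function names, the learning rate and the iteration count are assumptions chosen so the result is easy to check by hand.

```python
def predict(theta0, theta1, x):
    """Model hypothesis: a straight line with intercept theta0 and slope theta1."""
    return theta0 + theta1 * x


def fit_line(xs, ys, learning_rate=0.1, iterations=5000):
    """Fit theta0 and theta1 with batch gradient descent on the squared error."""
    theta0, theta1 = 0.0, 0.0
    m = len(xs)
    for _ in range(iterations):
        # Difference between the current prediction and the correct answer.
        errors = [predict(theta0, theta1, x) - y for x, y in zip(xs, ys)]
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        # Update both parameters simultaneously.
        theta0 -= learning_rate * grad0
        theta1 -= learning_rate * grad1
    return theta0, theta1


# Made-up training data (input: size, output: price), exactly on y = 1 + 2x.
sizes = [1.0, 2.0, 3.0, 4.0]
prices = [3.0, 5.0, 7.0, 9.0]

theta0, theta1 = fit_line(sizes, prices)

# Prediction for a new input example that was not part of the training data.
new_price = predict(theta0, theta1, 5.0)
```

Here the training data plays the role of the input and the prices are the "correct answers". The fitted parameters are then used to predict the output for a size the model has never seen.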

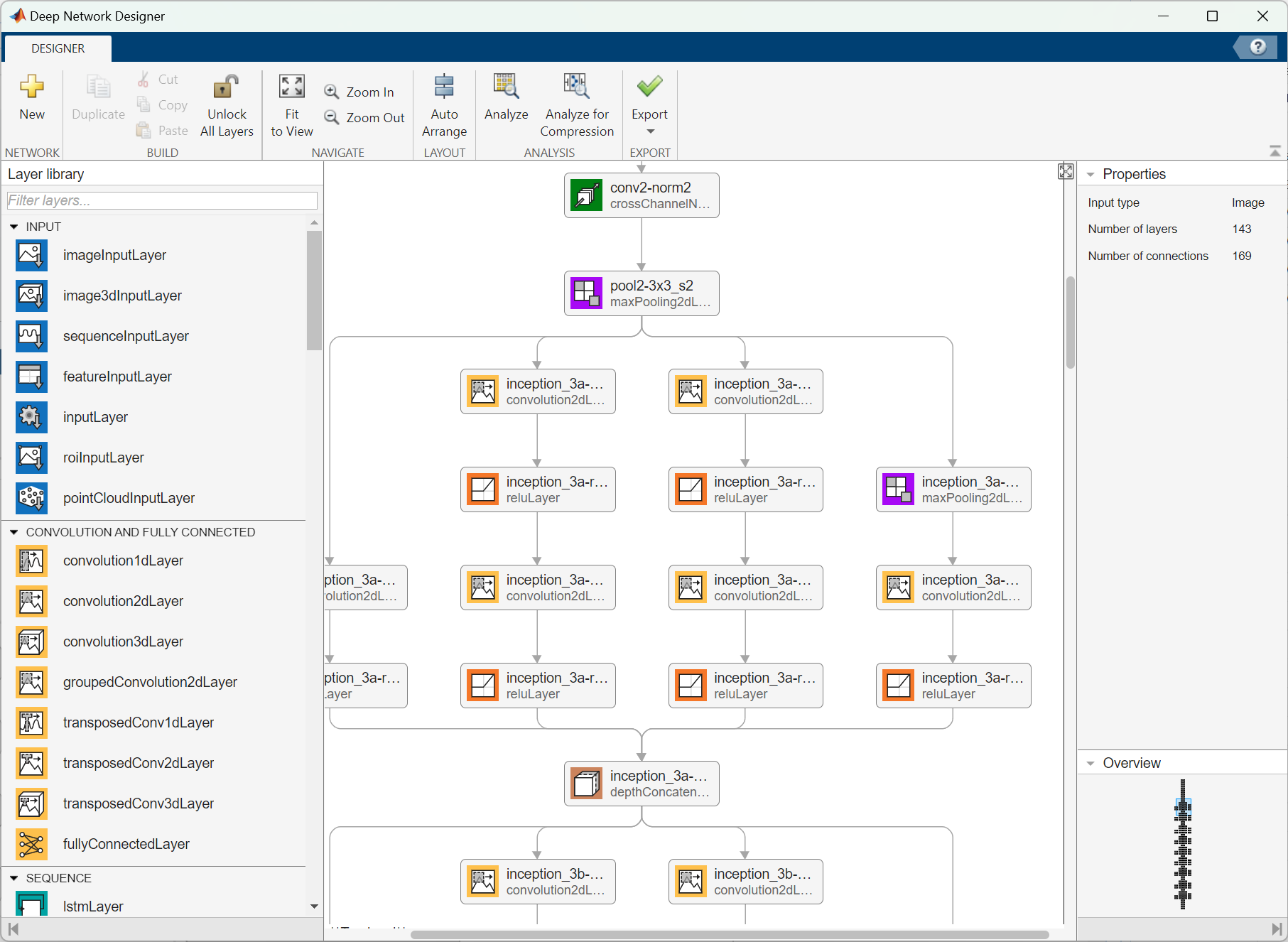

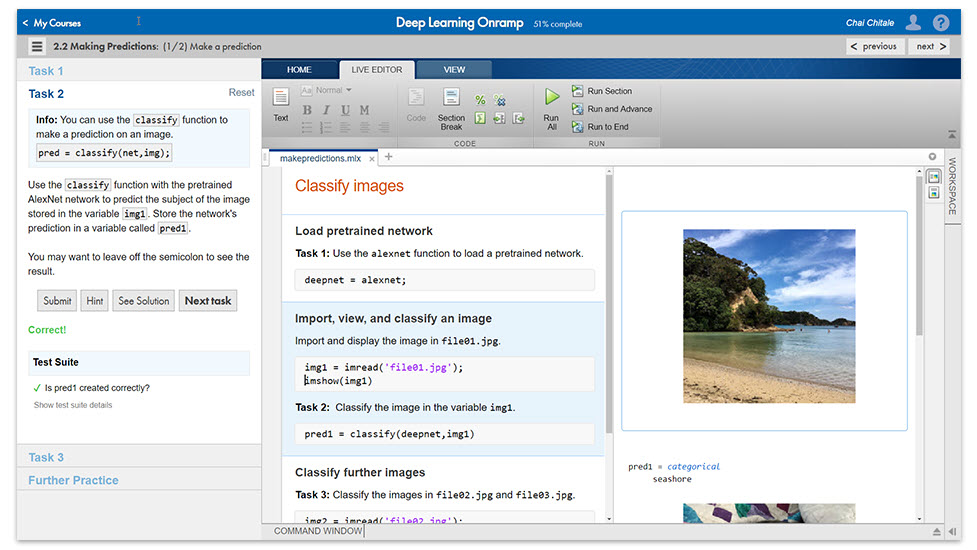

This repository contains MATLAB/Octave examples of popular machine learning algorithms, with code examples and explanations of the mathematics behind them. For the Python/Jupyter version of this repository, please see the homemade-machine-learning project.
